Advanced Shell Scripting: From Chaos to Control - Understanding the Art of Professional Automation

Picture this: It's 3 AM, your server goes down, and instead of frantically SSH-ing into boxes and running commands manually, your monitoring system has already detected the issue, restarted the service, sent you a notification, and logged everything for analysis. This isn't magic—it's the power of advanced shell scripting.

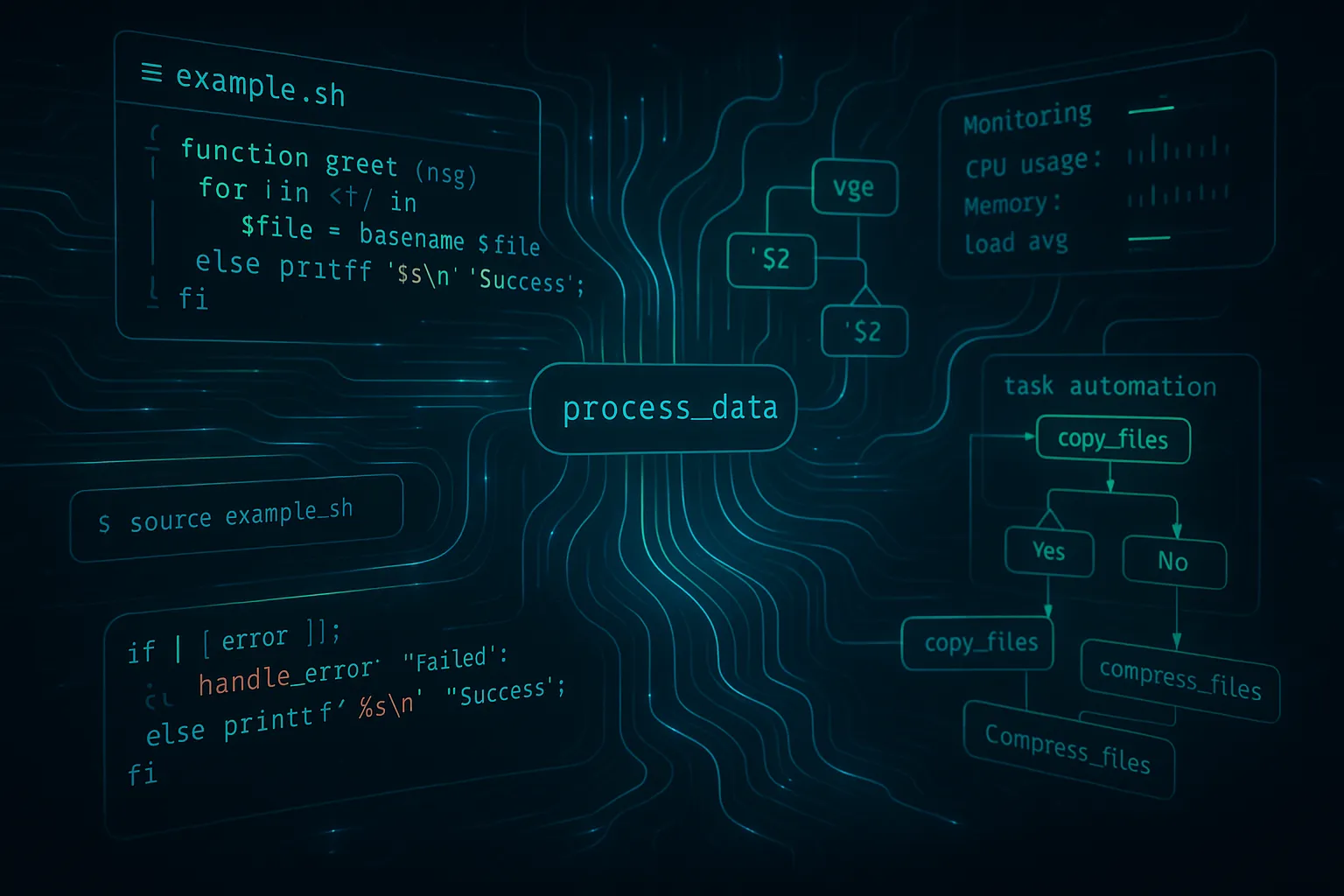

Today, we're diving into the art and science of professional shell scripting. We'll explore how the right patterns and principles can transform fragile, one-off scripts into robust automation systems that form the backbone of modern infrastructure.

The Journey from Script Kiddie to Automation Engineer

Why Most Scripts Fail in Production

Before we dive into solutions, let's understand the problem. Most scripts start simple:

# The "quick fix" that becomes permanent
ps aux | grep nginx | awk '{print $2}' | xargs kill -9

This works... until it doesn't. What happens when:

- grep matches the grep process itself?

The painful reality: Most scripts are written for the happy path but fail catastrophically when reality intervenes.
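One way to harden the nginx one-liner above is to match the process by exact name and escalate from SIGTERM to SIGKILL only when needed. The following is a minimal sketch, not a drop-in replacement: `stop_process` and its timeout argument are names invented for illustration, and it assumes `pgrep` (from procps or an equivalent package) is installed.

```bash
#!/usr/bin/env bash
# Sketch: stop a process by exact name, politely first, forcefully only if needed.
# stop_process and its timeout parameter are illustrative, not from the article.

stop_process() {
    local name="$1"
    local timeout="${2:-10}"
    local pids

    # pgrep -x matches the exact process name and never reports itself,
    # which avoids the "grep matches grep" problem.
    if ! pids=$(pgrep -x "$name"); then
        echo "No running process named '$name'" >&2
        return 0
    fi

    # Ask nicely first so the process can flush buffers and clean up.
    # $pids is intentionally unquoted: it holds one PID per word.
    kill -TERM $pids 2>/dev/null || true

    # Wait up to $timeout seconds for the process to disappear.
    local waited=0
    while pgrep -x "$name" >/dev/null && (( waited < timeout )); do
        sleep 1
        waited=$((waited + 1))
    done

    # Escalate only if something is still running after the grace period.
    if pids=$(pgrep -x "$name"); then
        echo "'$name' still running after ${timeout}s, sending SIGKILL" >&2
        kill -KILL $pids 2>/dev/null || true
    fi
}
```

Calling `stop_process nginx 15` would give nginx fifteen seconds to exit cleanly. Treating "nothing to stop" as success is a design choice here; a stricter script might return a distinct code instead.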

The Advanced Shell Scripting Mindset

Professional shell scripting is about defensive programming—writing code that assumes everything will go wrong and handles it gracefully. It's the difference between:

Amateur approach: "This script works on my machine"

Professional approach: "This script handles edge cases, logs failures, and provides meaningful feedback"

The transformation involves three core principles:

1. Predictability: Scripts should behave consistently across different environments
2. Observability: You should always know what your scripts are doing and why they failed
3. Maintainability: Code should be readable and modifiable by your future self (and teammates)
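Observability usually starts with a small logging layer. The examples in this article call helpers such as `log_warning`, `log_error`, and `log_debug` without defining them, so here is one possible sketch. The `LOG_LEVEL` and `LOG_FILE` variables, the level names, and the timestamp format are assumptions made for illustration.

```bash
#!/usr/bin/env bash
# Minimal leveled logger (a sketch; variable names and format are assumptions).

LOG_LEVEL="${LOG_LEVEL:-INFO}"
LOG_FILE="${LOG_FILE:-}"    # empty means "stderr only"

# Map a level name to a number so levels can be compared.
_level_number() {
    case "$1" in
        DEBUG)   echo 0 ;;
        INFO)    echo 1 ;;
        WARNING) echo 2 ;;
        ERROR)   echo 3 ;;
        *)       echo 1 ;;
    esac
}

log_message() {
    local level="$1"; shift

    # Drop messages below the configured level.
    if (( $(_level_number "$level") < $(_level_number "$LOG_LEVEL") )); then
        return 0
    fi

    local line
    line="$(date '+%Y-%m-%d %H:%M:%S') [$level] $*"

    # Logs go to stderr so they never mix with data a function prints on stdout.
    echo "$line" >&2
    if [[ -n "$LOG_FILE" ]]; then
        echo "$line" >> "$LOG_FILE"
    fi
}

log_debug()   { log_message DEBUG "$@"; }
log_info()    { log_message INFO "$@"; }
log_warning() { log_message WARNING "$@"; }
log_error()   { log_message ERROR "$@"; }
```

Writing to stderr matters for functions whose stdout is captured with `$(...)`: the caller gets only the data, while the log lines still reach the terminal or the log file.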

The Architecture of Professional Scripts

Understanding the Function Paradigm

Think of functions as the LEGO blocks of shell scripting. Instead of writing monolithic scripts that do everything, you build small, focused functions that can be combined in different ways.

The Conceptual Shift:

# Instead of this monolithic approach:
# check_disk_space_and_clean_logs_and_restart_service.sh

# Build modular functions:
check_disk_space() { ... }
clean_old_logs() { ... }
restart_service_safely() { ... }
send_notification() { ... }

# Then compose them:
main() {
    if ! check_disk_space; then
        clean_old_logs
        restart_service_safely "nginx"
        send_notification "Disk cleanup performed"
    fi
}

Why This Matters:

The Error Handling Philosophy

Advanced shell scripting treats errors as first-class citizens. Instead of hoping nothing goes wrong, you plan for failure and handle it gracefully.

The Error Handling Hierarchy:

1. Prevention: Validate inputs before using them
2. Detection: Use proper exit codes and checks
3. Response: Handle errors appropriately (retry, fallback, abort)
4. Communication: Log what happened and notify relevant parties
5. Recovery: Clean up and return to a known good state
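The first and last rungs of this hierarchy, prevention and recovery, can be sketched with input validation and an `EXIT` trap. This is an illustration under assumptions: `unique_lines`, `WORK_DIR`, `cleanup`, and the return codes are hypothetical names and conventions, not part of any standard.

```bash
#!/usr/bin/env bash
# Sketch of "Prevention" and "Recovery": validate inputs, always clean up.
# unique_lines, WORK_DIR, and cleanup are illustrative names.

# Create the scratch directory once, at the top level of the script, so the
# EXIT trap below runs in the same shell that owns the directory.
WORK_DIR=$(mktemp -d) || exit 1

cleanup() {
    # Recovery: runs on every exit path, including errors and Ctrl-C.
    rm -rf -- "$WORK_DIR"
}
trap cleanup EXIT

unique_lines() {
    local input="${1:-}"

    # Prevention: reject bad input before doing any work.
    if [[ -z "$input" ]]; then
        echo "Error: no input file given" >&2
        return 2
    fi
    if [[ ! -r "$input" ]]; then
        echo "Error: cannot read '$input'" >&2
        return 2
    fi

    # Detection: check the exit status of the step that can fail.
    if ! sort -u -- "$input" > "$WORK_DIR/sorted.txt"; then
        echo "Error: sorting '$input' failed" >&2
        return 1
    fi

    cat "$WORK_DIR/sorted.txt"
}
```

Using separate return codes for "bad input" (2) and "operation failed" (1) lets a caller decide whether a retry makes sense. The trap is installed at the top level because bash does not run the parent's `EXIT` trap inside `$(...)` subshells.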

Example of Professional Error Handling:

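# Instead of hoping curl works:
curl https://api.example.com/data

# Professional approach:
fetch_api_data() {
    local url="$1"
    local max_retries=3
    local retry_count=0
    local response   # declared separately so the assignment below keeps curl's exit status

    while [[ $retry_count -lt $max_retries ]]; do
        # -s silences progress output; -f turns HTTP errors (4xx/5xx) into a non-zero exit
        if response=$(curl -sf "$url" 2>/dev/null); then
            echo "$response"
            return 0
        fi

        # Plain assignment: ((retry_count++)) returns a non-zero status when the
        # old value is 0, which would abort the script under "set -e"
        retry_count=$((retry_count + 1))
        log_warning "API call failed (attempt $retry_count/$max_retries)"
        [[ $retry_count -lt $max_retries ]] && sleep 5
    done

    log_error "Failed to fetch data from $url after $max_retries attempts"
    return 1
}

Smart Configuration Management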

Professional scripts are environment-aware. They adapt to different systems, users, and configurations without hardcoded values.

The Configuration Strategy:

1. Sensible Defaults: Scripts work out of the box
2. Environment Variables: Allow easy overrides
3. Configuration Files: Support complex setups
4. Command-Line Arguments: Enable script reuse
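The configuration-file layer can be sketched as a small parser that reads `KEY=VALUE` pairs without executing the file, which is safer than `source`-ing something a user can edit. The function name `load_config`, the accepted keys, and the file format are assumptions for illustration.

```bash
#!/usr/bin/env bash
# Sketch: read KEY=VALUE pairs from a file without executing it.
# load_config and the whitelist of keys are illustrative assumptions.

load_config() {
    local file="$1"
    [[ -r "$file" ]] || return 1

    local key value
    # "|| [[ -n $key ]]" also processes a last line that has no trailing newline.
    while IFS='=' read -r key value || [[ -n "$key" ]]; do
        # Trim leading and trailing whitespace from the key.
        key="${key#"${key%%[![:space:]]*}"}"
        key="${key%"${key##*[![:space:]]}"}"

        # Skip blank lines and comments.
        [[ -z "$key" || "$key" == \#* ]] && continue

        # Trim leading and trailing whitespace from the value.
        value="${value#"${value%%[![:space:]]*}"}"
        value="${value%"${value##*[![:space:]]}"}"

        # Only known keys are accepted, and numeric settings are validated.
        case "$key" in
            TIMEOUT|RETRY_COUNT)
                if [[ "$value" =~ ^[0-9]+$ ]]; then
                    printf -v "$key" '%s' "$value"
                else
                    echo "Ignoring non-numeric $key='$value'" >&2
                fi
                ;;
            LOG_LEVEL)
                printf -v "$key" '%s' "$value"
                ;;
            *)
                echo "Ignoring unknown key: $key" >&2
                ;;
        esac
    done < "$file"

    return 0
}
```

Where the file sits in the precedence order is a design choice: calling `load_config` before the environment overrides lets environment variables win, while calling it afterwards lets the file win. Either way, parsing command-line arguments last keeps them on top.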

Example Architecture:

# Default configuration
DEFAULT_TIMEOUT=30
DEFAULT_RETRY_COUNT=3
DEFAULT_LOG_LEVEL="INFO"

# Allow environment overrides
TIMEOUT=${TIMEOUT:-$DEFAULT_TIMEOUT}
RETRY_COUNT=${RETRY_COUNT:-$DEFAULT_RETRY_COUNT}
LOG_LEVEL=${LOG_LEVEL:-$DEFAULT_LOG_LEVEL}

# Command-line arguments override everything
while [[ $# -gt 0 ]]; do
    case "$1" in
        # ${2:?...} aborts with a message when the value is missing; without it,
        # "shift 2" fails on a trailing option and the loop never terminates
        --timeout) TIMEOUT="${2:?--timeout requires a value}"; shift 2 ;;
        --retries) RETRY_COUNT="${2:?--retries requires a value}"; shift 2 ;;
        --verbose) LOG_LEVEL="DEBUG"; shift ;;
        *) echo "Unknown option: $1" >&2; exit 1 ;;
    esac
done

Building a Mental Model: The System Monitoring Example

Let's explore advanced concepts through a practical example: building a system monitoring script that demonstrates professional practices without drowning in implementation details.

The Design Phase: Thinking Before Coding

Question 1: What should we monitor?

Question 2: How should we handle failures?

Question 3: How do we make it maintainable?
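One possible answer to the first two questions, sketched for a single check: monitor disk usage, and treat "could not measure" as its own outcome rather than as success. The name `monitor_disk_space` appears in this article's module outline; the helper `disk_usage_percent`, the arguments, and the return codes (0 = OK, 1 = alert, 2 = unknown) are assumptions.

```bash
#!/usr/bin/env bash
# Sketch of one monitoring check. disk_usage_percent and the 0/1/2 return
# convention are illustrative assumptions.

# Print the usage of the filesystem holding $1 as a bare integer (e.g. 42).
disk_usage_percent() {
    local path="${1:-/}"
    # df -P produces the portable one-line-per-filesystem format;
    # column 5 is the capacity, such as "42%".
    df -P -- "$path" 2>/dev/null | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

monitor_disk_space() {
    local path="${1:-/}"
    local threshold="${2:-90}"
    local usage
    usage=$(disk_usage_percent "$path")

    # Failure handling: a missing or non-numeric reading is reported
    # separately instead of silently passing the check.
    if [[ ! "$usage" =~ ^[0-9]+$ ]]; then
        echo "UNKNOWN: could not read disk usage for $path" >&2
        return 2
    fi

    if (( usage >= threshold )); then
        echo "ALERT: $path is ${usage}% full (threshold ${threshold}%)"
        return 1
    fi

    echo "OK: $path is ${usage}% full"
    return 0
}
```

Keeping the measurement in its own function answers the maintainability question as well: the parsing of `df` output can be replaced or stubbed in tests without touching the threshold logic.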

The Modular Architecture

# Instead of one giant script, think in modules:

# Core monitoring functions
monitor_cpu_usage()
monitor_memory_usage()
monitor_disk_space()
monitor_services()

# Alerting system
send_email_alert()
send_slack_notification()
log_alert()

# Configuration management
load_config()
validate_config()
set_defaults()

# Utility functions
log_message()
handle_error()
cleanup_on_exit()

# Main orchestration
main()

The Professional Touch: What Makes It Advanced

1. Intelligent Thresholds

Instead of hardcoded values, use adaptive thresholds:

# Beginner: Fixed threshold
if [[ $cpu_usage -gt 80 ]]; then
    ...
fi

# Advanced: Context-aware thresholds
calculate_threshold() {
    local base_threshold=80
    local current_hour
    # Force base 10: "08" and "09" would otherwise be rejected as invalid octal numbers
    current_hour=$((10#$(date +%H)))

    # Higher threshold during business hours
    if [[ $current_hour -ge 9 && $current_hour -le 17 ]]; then
        echo $((base_threshold + 10))
    else
        echo "$base_threshold"
    fi
}

2. Self-Documenting Code

# Instead of cryptic variable names:
t=80; m=90; d=95

# Use descriptive names that explain intent:
CPU_WARNING_THRESHOLD=80
MEMORY_CRITICAL_THRESHOLD=90
DISK_EMERGENCY_THRESHOLD=95

3. Graceful Degradation

# If email fails, try alternative notification methods
send_notification() {
    local message="$1"

    if ! send_email "$message"; then
        log_warning "Email notification failed, trying Slack"
        if ! send_slack "$message"; then
            log_warning "Slack notification failed, logging locally"
            log_alert "$message"
        fi
    fi
}

The Professional Patterns Every Developer Should Know

Pattern 1: The Guard Clause Strategy

Concept: Validate everything upfront and fail fast

validate_environment() {
    # Check required commands
    for cmd in curl jq systemctl; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            log_error "Required command not found: $cmd"
            return 1
        fi
    done

    # Check required files
    for file in "/etc/passwd" "/proc/loadavg"; do
        if [[ ! -r "$file" ]]; then
            log_error "Cannot read required file: $file"
            return 1
        fi
    done

    return 0
}

main() {
    # Fail fast if environment isn't ready
    validate_environment || exit 1

    # Now we can proceed with confidence
    run_monitoring_checks
}

Pattern 2: The State Machine Approach

Concept: Complex scripts can be modeled as state machines

# States: STARTING -> MONITORING -> ALERTING -> RECOVERING -> MONITORING
SCRIPT_STATE="STARTING"

transition_to() {
    local new_state="$1"
    log_debug "State transition: $SCRIPT_STATE -> $new_state"
    SCRIPT_STATE="$new_state"
}

handle_current_state() {
    case "$SCRIPT_STATE" in
        "STARTING")
            initialize_monitoring
            transition_to "MONITORING"
            ;;
        "MONITORING")
            if detect_issues; then
                transition_to "ALERTING"
            fi
            ;;
        "ALERTING")
            send_alerts
            transition_to "RECOVERING"
            ;;
        "RECOVERING")
            attempt_recovery
            transition_to "MONITORING"
            ;;
    esac
}

Pattern 3: The Plugin Architecture

Concept: Make scripts extensible through plugins

# Core framework provides the structure
run_monitoring_plugins() {
    local plugin_dir="/etc/monitoring/plugins"

    if [[ -d "$plugin_dir" ]]; then
        for plugin in "$plugin_dir"/*.sh; do
            if [[ -x "$plugin" ]]; then
                log_debug "Running plugin: $(basename "$plugin")"
                if ! "$plugin"; then
                    log_warning "Plugin failed: $(basename "$plugin")"
                fi
            fi
        done
    fi
}

# Plugins are simple, focused scripts:
# /etc/monitoring/plugins/check-database.sh
# /etc/monitoring/plugins/check-website.sh
# /etc/monitoring/plugins/check-certificates.sh

The Art of Script Evolution

From Prototype to Production

Stage 1: The Proof of Concept

Stage 2: The Minimum Viable Script

Stage 3: The Production Script

Making Scripts Team-Friendly

1. Self-Documenting Help System

show_help() {
    cat << 'EOF'
System Monitor v2.0

USAGE:
    monitor.sh [OPTIONS] [COMMAND]

COMMANDS:
    start     Start continuous monitoring
    check     Run single check
    status    Show current status

OPTIONS:
    --config FILE    Use custom config file
    --verbose        Enable debug output
    --dry-run        Show what would be done

EXAMPLES:
    monitor.sh check --verbose
    monitor.sh start --config /etc/custom.conf
EOF
}

2. Configuration Discovery

find_config_file() {
    # Check multiple locations in order of preference
    local config_locations=(
        "${CONFIG_FILE:-}"                  # Command line
        "${HOME}/.config/monitor/config"    # User config
        "/etc/monitor/config"               # System config
        "${SCRIPT_DIR}/config"              # Script directory
    )

    for location in "${config_locations[@]}"; do
        if [[ -n "$location" && -r "$location" ]]; then
            echo "$location"
            return 0
        fi
    done

    return 1
}

Performance and Security Considerations

Performance Tips:
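As one illustration of the kind of tip that belongs here, a script can avoid spawning external processes for work the shell can do itself. This is a minimal sketch with a made-up path and a hypothetical `count_lines` helper; `mapfile` needs bash 4 or newer.

```bash
#!/usr/bin/env bash
# Sketch: prefer shell builtins over external commands, especially in loops.
# Every external command costs a fork and exec; builtins do not.

path="/var/log/nginx/access.log"    # example path, not read by this sketch

# External commands: one new process each.
name_slow=$(basename "$path")
dir_slow=$(dirname "$path")

# Parameter expansion: same result for paths that contain a slash,
# with no extra process.
name_fast="${path##*/}"
dir_fast="${path%/*}"

# Read a whole file into an array with the mapfile builtin (bash 4+)
# instead of looping over the output of cat.
count_lines() {
    local -a lines
    mapfile -t lines < "$1"
    echo "${#lines[@]}"
}
```

The difference is invisible for a single call and becomes noticeable inside loops that run thousands of times. Note that `${path%/*}` and `dirname` disagree for names without a slash, so the expansion is only a substitute when the input is known to be a full path.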

Security Principles:
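A few defensive habits can be sketched in code: quote every expansion, end option parsing with `--`, refuse obviously dangerous arguments, and create temporary files with `mktemp`. The helper names `safe_remove` and `make_scratch_file` and the `monitor.XXXXXX` template are hypothetical, chosen only for this example.

```bash
#!/usr/bin/env bash
# Sketch of a few defensive habits. safe_remove and make_scratch_file
# are illustrative names, not part of any library.

# Files created from here on are readable and writable by the owner only.
umask 077

safe_remove() {
    local target="${1:-}"

    # Refuse empty or root paths so an unset variable can never turn
    # into "rm -rf /".
    if [[ -z "$target" || "$target" == "/" ]]; then
        echo "Refusing to remove '$target'" >&2
        return 1
    fi

    # Quotes keep names with spaces intact; "--" stops option parsing so
    # a name starting with a dash is treated as a path, not a flag.
    rm -rf -- "$target"
}

make_scratch_file() {
    # mktemp creates the file atomically with an unpredictable name,
    # unlike a guessable path such as /tmp/myscript.$$
    mktemp "${TMPDIR:-/tmp}/monitor.XXXXXX"
}
```

A typical use is `scratch=$(make_scratch_file)` followed later by `safe_remove "$scratch"`, ideally from an `EXIT` trap so the file disappears even when the script fails.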

The Community Aspect: Learning and Sharing

Learning from Others

Great Resources for Advanced Shell Scripting:

Contributing Back

Ways to Help the Community:

Building Your Scripting Portfolio

Showcase Projects:

1. System Monitoring Suite: Demonstrate comprehensive monitoring
2. Deployment Automation: Show CI/CD integration skills
3. Log Analysis Tools: Display data processing abilities
4. Security Scanning Scripts: Highlight security awareness
5. Backup and Recovery: Prove reliability engineering skills

Modern Integration: Shell Scripts in the Cloud Era

Container-Aware Scripting

Modern scripts need to understand containerized environments:

detect_environment() {
    if [[ -f /.dockerenv ]]; then
        echo "container"
    elif [[ -n "${KUBERNETES_SERVICE_HOST:-}" ]]; then
        echo "kubernetes"
    elif [[ -n "${AWS_REGION:-}" ]]; then
        echo "aws"
    else
        echo "traditional"
    fi
}

adjust_for_environment() {
    local env=$(detect_environment)

    case "$env" in
        "container")
            # Adjust monitoring for container limits
            use_cgroup_metrics
            ;;
        "kubernetes")
            # Use k8s APIs for service discovery
            discover_services_via_k8s
            ;;
        "aws")
            # Integrate with CloudWatch
            send_metrics_to_cloudwatch
            ;;
    esac
}

GitOps Integration

Version Control for Scripts:

Deployment Strategies:

The Path to Mastery

Building Your Skill Progression

Beginner to Intermediate:

1. Master basic syntax and commands
2. Learn to use functions effectively
3. Understand pipes and redirection
4. Practice regular expressions

Intermediate to Advanced:

1. Implement robust error handling
2. Design modular architectures
3. Master process management
4. Learn performance optimization

Advanced to Expert:

1. Contribute to open-source projects
2. Mentor other developers
3. Design framework-level solutions
4. Integrate with modern infrastructure

Daily Practice Recommendations

Week 1-2: Refactor existing scripts to use functions
Week 3-4: Add comprehensive error handling to all scripts
Week 5-6: Implement configuration management patterns
Week 7-8: Build a complete monitoring solution
Week 9-10: Add testing and documentation
Week 11-12: Share and get feedback from the community

Conclusion: The Transformation Journey

Advanced shell scripting isn't about memorizing complex syntax or writing the longest scripts. It's about thinking systematically and applying software engineering principles to automation challenges.

The key transformations:

🏗️ From Linear to Modular: Break problems into small, focused functions
🛡️ From Hopeful to Defensive: Plan for failures and handle them gracefully
📊 From Silent to Observable: Make scripts communicate what they're doing
⚙️ From Rigid to Flexible: Design for different environments and use cases
🤝 From Solo to Collaborative: Write code that others can understand and maintain

Your Action Plan

1. Start Small: Pick one existing script and refactor it using the patterns we discussed
2. Practice Daily: Spend 15 minutes each day improving your scripting skills
3. Join Communities: Participate in forums, Discord servers, and local meetups
4. Share Knowledge: Write about your experiences and help others learn
5. Stay Current: Follow developments in DevOps and automation tools

The Bigger Picture

Remember, shell scripting is not just about automation—it's about amplifying human capabilities. When you write a robust monitoring script, you're not just checking system health; you're enabling your team to focus on higher-value work while the script handles the routine vigilance.

Every function you write with proper error handling is a small investment in the reliability of your infrastructure. Every configurable parameter is a gift to your future self and teammates. Every clear log message is documentation that will save someone hours of debugging.

The journey from basic to advanced shell scripting mirrors the journey from code that works to code that matters. It's about building automation that doesn't just solve today's problems but adapts to tomorrow's challenges.

---

🚀 Continue Your Linux Journey

This is Part 20 of our comprehensive Linux mastery series - Advanced Automation Techniques!

Previous: Performance Monitoring - Master system monitoring and optimization

Congratulations! You've completed the comprehensive Linux mastery series!

Tomorrow, we'll explore how these shell scripting skills integrate with modern container orchestration and cloud-native environments. The foundation you're building today will serve you well as we dive into Kubernetes and infrastructure as code.
