Docker for Backend Developers: Part 5 - Production Deployment Strategies


So you've mastered containers, built multi-service apps, and optimized for production. Now comes the real test: deploying to production where millions of users depend on your services being up 24/7.

This is where I learned that knowing Docker and knowing how to deploy Docker are two completely different skills.

The Day My "Perfect" Deployment Failed

Picture this: I had spent weeks perfecting our Docker setup. Beautiful multi-stage builds, optimized images, comprehensive health checks. Everything worked flawlessly in staging.

Then we deployed to production during peak traffic.

- 5:02 PM: Deployment started
- 5:03 PM: Old containers stopped
- 5:04 PM: New containers starting
- 5:05 PM: Site down. Panic mode.

The new containers took 3 minutes to become healthy, but we killed the old ones immediately. Those 3 minutes felt like hours as support tickets flooded in.

That's when I learned that container deployment isn't just about the containers—it's about the transition.

The Philosophy of Zero-Downtime Deployment

The fundamental insight that changed everything for me: overlapping deployments.

Traditional deployment thinking:

1. Stop old version
2. Start new version
3. Hope it works

Container deployment thinking:

1. Start new version alongside old
2. Verify new version is healthy
3. Gradually shift traffic
4. Stop old version only when safe

This shift in mindset—from replacement to transition—is what separates hobby projects from production systems.
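The four container-deployment steps above can be sketched as a small deploy driver. This is a minimal illustration, not the tooling I actually used: `start_new`, `check_new`, `shift_traffic`, `stop_old`, and `stop_new` are hypothetical callbacks you would wire to your own platform (Compose, Swarm, a load balancer API). The detail that matters is the health-check deadline. With a 300-second timeout, a version that needs 3 minutes to warm up, like the one in my story, gets its chance while the old containers keep serving.

```python
import time
from typing import Callable


def wait_until_healthy(check: Callable[[], bool], timeout_s: float,
                       interval_s: float = 1.0,
                       clock: Callable[[], float] = time.monotonic,
                       sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll `check` until it returns True or the deadline passes."""
    deadline = clock() + timeout_s
    while clock() < deadline:
        if check():
            return True
        sleep(interval_s)
    return False


def overlapping_deploy(start_new: Callable[[], None],
                       check_new: Callable[[], bool],
                       shift_traffic: Callable[[], None],
                       stop_old: Callable[[], None],
                       stop_new: Callable[[], None],
                       timeout_s: float = 300.0,
                       interval_s: float = 5.0,
                       clock: Callable[[], float] = time.monotonic,
                       sleep: Callable[[float], None] = time.sleep) -> bool:
    """Start new alongside old; stop old only after new is proven healthy."""
    start_new()  # 1. the old version keeps serving in the meantime
    # 2. verify: poll the new version's health until the deadline
    if not wait_until_healthy(check_new, timeout_s, interval_s, clock, sleep):
        stop_new()  # never became healthy, so abort; traffic never moved
        return False
    shift_traffic()  # 3. switch (or gradually shift) traffic to the new version
    stop_old()       # 4. only now is it safe to stop the old version
    return True


if __name__ == "__main__":
    # Simulated clock: the new version becomes healthy after 180 seconds.
    now = [0.0]
    ok = overlapping_deploy(
        start_new=lambda: print("starting new version"),
        check_new=lambda: now[0] >= 180,
        shift_traffic=lambda: print("shifting traffic to new version"),
        stop_old=lambda: print("stopping old version"),
        stop_new=lambda: print("aborting: stopping new version"),
        clock=lambda: now[0],
        sleep=lambda s: now.__setitem__(0, now[0] + s),
    )
    print("deployed" if ok else "rolled back")
```

Injecting `clock` and `sleep` keeps the logic testable without actually waiting three minutes.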

Blue-Green Deployment: The Safety Net

Blue-Green is the deployment pattern I wish I'd learned first. The concept is beautifully simple:

- Blue Environment: Currently serving production traffic
- Green Environment: New version, being prepared

The magic happens in the switch—you flip traffic from Blue to Green instantly, but keep Blue ready as a fallback.

My Blue-Green Learning Experience

Here's how I implement it with Docker Compose:

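```yaml
# docker-compose.blue.yml
services:
  api-blue:
    image: my-api:v1.2.3
    environment:
      - ENVIRONMENT=blue
    labels:
      # Router names should be unique per color so the two definitions don't collide
      - "traefik.http.routers.api-blue.rule=Host(`api.mycompany.com`)"
```

```yaml
# docker-compose.green.yml
services:
  api-green:
    image: my-api:v1.2.4
    environment:
      - ENVIRONMENT=green
    labels:
      - "traefik.http.routers.api-green.rule=Host(`api.mycompany.com`)"
```

Both routers match the same host, so while both colors are running, only the color that should receive production traffic should keep its router enabled (for example, by recreating the idle color with the label traefik.enable=false).

The deployment process becomes: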

1. Deploy Green: docker compose -p api-green -f docker-compose.green.yml up -d (a separate project name keeps Compose from treating the Blue container as an orphan)

2. Test Green: Run health checks and smoke tests

3. Switch Traffic: Update load balancer to point to Green

4. Monitor: Watch metrics for any issues

5. Cleanup Blue: Once confident, stop Blue environment

If anything goes wrong, switching back takes seconds, not minutes.
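The switch-and-fallback logic is small enough to sketch. Below is a hypothetical `BlueGreenRouter` that only tracks which color is live; in practice its `switch` and `rollback` methods would also update your proxy or load balancer (with Traefik, for example, by changing which router claims the production host). The `is_healthy` callback is an assumption standing in for the health checks and smoke tests from step 2.

```python
from typing import Callable, Optional


class BlueGreenRouter:
    """Tracks which color serves production traffic (hypothetical helper)."""

    def __init__(self, active: str = "blue") -> None:
        if active not in ("blue", "green"):
            raise ValueError("active must be 'blue' or 'green'")
        self.active = active
        self.previous: Optional[str] = None

    @property
    def idle(self) -> str:
        """The color that is running but not receiving traffic."""
        return "green" if self.active == "blue" else "blue"

    def switch(self, is_healthy: Callable[[str], bool]) -> str:
        """Flip traffic to the idle color, but only if it passes its checks."""
        target = self.idle
        if not is_healthy(target):
            raise RuntimeError(f"{target} failed checks; traffic stays on {self.active}")
        self.previous, self.active = self.active, target
        return self.active

    def rollback(self) -> str:
        """Send traffic back to the previous color, which was kept running."""
        if self.previous is None:
            raise RuntimeError("no previous color to roll back to")
        self.active, self.previous = self.previous, self.active
        return self.active


if __name__ == "__main__":
    router = BlueGreenRouter(active="blue")
    print(router.switch(lambda color: True))  # green is now live
    print(router.rollback())                  # blue is live again
```

Rollback is just another switch, which is why it takes seconds: the previous color never stopped running.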

Rolling Deployments: The Gradual Approach

Sometimes Blue-Green feels too aggressive—you want to roll out changes gradually. That's where rolling deployments shine.

The Canary Pattern

Start with a small percentage of traffic on the new version:

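```bash
# Assumes api_old is already running and both services sit behind the same
# load balancer, so traffic splits roughly in proportion to replica counts.

# Start with roughly 10% of traffic on the new version (1 of 11 replicas)
docker service update --replicas 10 api_old
docker service create --name api_new --replicas 1 my-api:v1.2.4

# Gradually increase new, decrease old
docker service update --replicas 8 api_old
docker service update --replicas 3 api_new

# Eventually migrate completely: scale new up before scaling old down
docker service update --replicas 10 api_new
docker service update --replicas 0 api_old
```

This approach taught me that deployment isn't binary; it's a spectrum of risk management.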
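The canary percentages come from simple arithmetic, under one big assumption: that the load balancer spreads requests evenly across every replica of both services. A quick sketch of that calculation (the `traffic_share` helper is hypothetical) shows why 1 new replica next to 10 old ones is only about 9% of traffic, not exactly 10%.

```python
def traffic_share(old_replicas: int, new_replicas: int) -> float:
    """Fraction of requests reaching the new version, assuming requests are
    spread evenly across all replicas of both services."""
    total = old_replicas + new_replicas
    if total == 0:
        raise ValueError("no replicas running")
    return new_replicas / total


if __name__ == "__main__":
    # The three canary stages as (old, new) replica counts
    for old, new in [(10, 1), (8, 3), (0, 10)]:
        print(f"old={old:>2} new={new:>2} -> {traffic_share(old, new):.0%} on new version")
```

Under that even-spreading assumption, the three stages work out to roughly 9%, 27%, and 100% of traffic on the new version.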

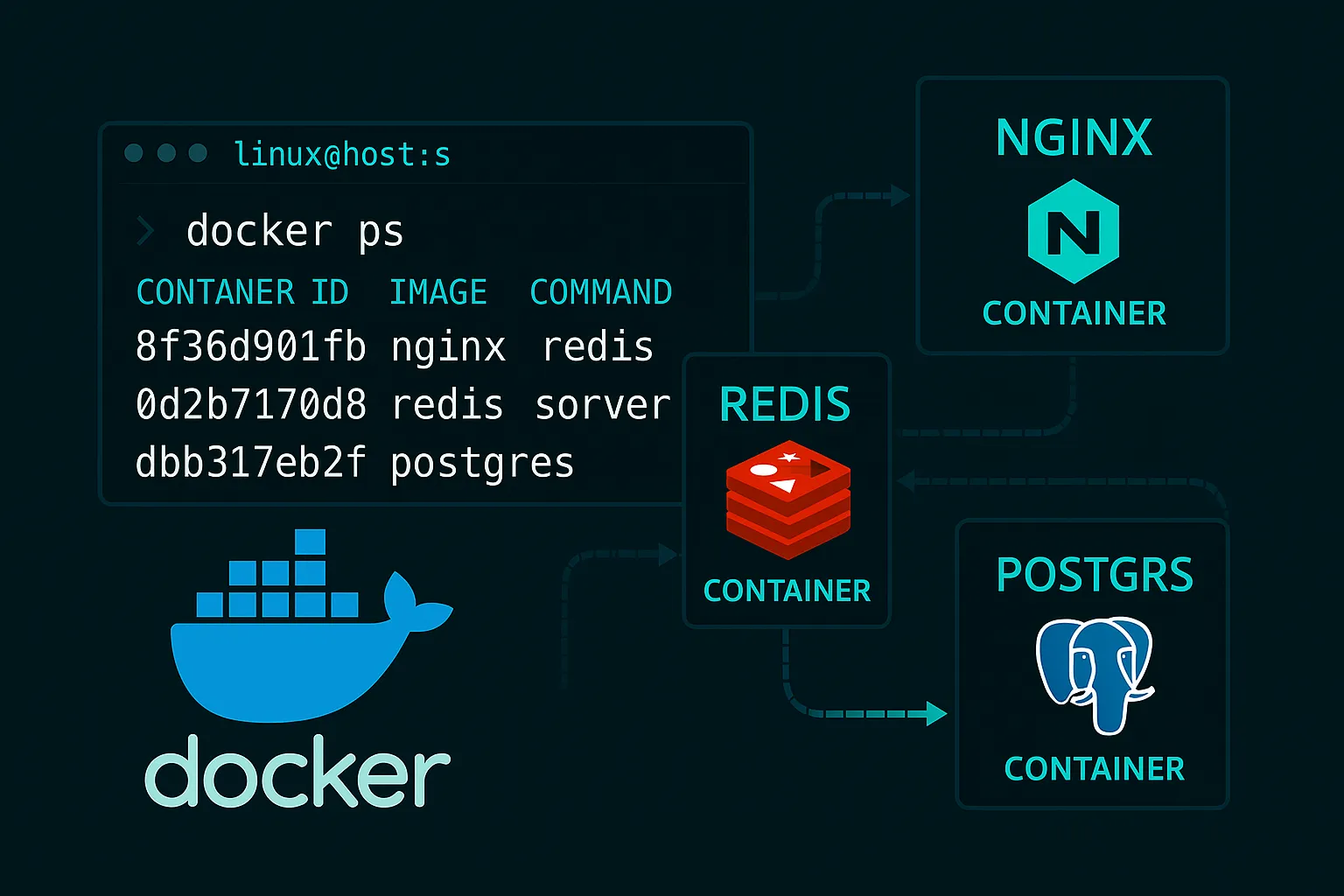

Container Orchestration: When Docker Compose Isn't Enough

Docker Compose works great for single-machine deployments, but production often means multiple servers, automatic scaling, and complex networking.

The Orchestration Mindset Shift

With orchestration, you stop thinking about individual containers and start thinking about desired state:

Instead of: "Run 3 containers of my API"

Think: "Ensure 3 healthy instances of my API are always running, somewhere in the cluster"
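That shift can be sketched as a reconciliation step: compare the desired count with what is actually running and healthy, then start or stop instances to close the gap. Orchestrators run this kind of loop continuously against observed state; the function below is a simplified, hypothetical illustration in which `start_instance` and `stop_instance` stand in for whatever the platform actually does.

```python
from itertools import count
from typing import Callable, Dict, List

Instance = Dict[str, object]


def reconcile(desired: int, running: List[Instance],
              start_instance: Callable[[], Instance],
              stop_instance: Callable[[Instance], None]) -> List[Instance]:
    """One pass of a desired-state loop; returns the instances kept running."""
    # Failed instances are replaced, not repaired
    for inst in running:
        if not inst["healthy"]:
            stop_instance(inst)
    healthy = [inst for inst in running if inst["healthy"]]
    # Converge the healthy count toward the desired count
    while len(healthy) < desired:
        healthy.append(start_instance())
    while len(healthy) > desired:
        stop_instance(healthy.pop())
    return healthy


if __name__ == "__main__":
    ids = count(3)
    running = [{"id": 1, "healthy": True}, {"id": 2, "healthy": False}]
    result = reconcile(
        desired=3,
        running=running,
        # Assumes newly started instances report healthy
        start_instance=lambda: {"id": next(ids), "healthy": True},
        stop_instance=lambda inst: print(f"stopping instance {inst['id']}"),
    )
    print(sorted(inst["id"] for inst in result))
```

You never tell the system which container to restart; you state the target, and every pass of the loop closes whatever gap it finds.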

Kubernetes: The Production Reality

Many production Docker deployments eventually lead to Kubernetes. Here's why it became inevitable for me:

- Service Discovery: Containers come and go, but services need stable endpoints
- Auto-scaling: Traffic spikes shouldn't require manual intervention
- Health Management: Failed containers should restart automatically
- Rolling Updates: Deployments should happen without service interruption

A Simple Kubernetes Deployment

```yaml
# deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-api
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1        # at most one extra pod during an update
      maxUnavailable: 0  # keep all 3 pods available throughout the update
  selector:
    matchLabels:
      app: my-api
  template:
    metadata:
      labels:
        app: my-api
    spec:
      containers:
        - name: api
          image: my-api:v1.2.4
          ports:
            - containerPort: 8080
          livenessProbe:     # restart the container if this keeps failing
            httpGet:
              path: /health
              port: 8080
            initialDelaySeconds: 30
          readinessProbe:    # route traffic to the pod only once this passes
            httpGet:
              path: /ready
              port: 8080
            initialDelaySeconds: 5
```

This YAML represents a contract with the cluster: "Keep 3 healthy instances running, update them without downtime, restart any that fail."
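To see what `maxSurge: 1` and `maxUnavailable: 0` mean in practice, here is a simplified simulation of the rollout. It is a sketch, not the real Deployment controller (which waits on readiness probes and can act on several pods at once), and it assumes each new pod becomes ready before any old pod is removed. With 3 replicas, the cluster never runs more than 4 pods and never has fewer than 3 serving traffic.

```python
from typing import List, Tuple


def rolling_update_steps(replicas: int, max_surge: int,
                         max_unavailable: int) -> List[Tuple[int, int]]:
    """Return (old_pods, new_pods) after each step of a rollout, assuming
    every new pod becomes ready before old pods are removed."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Surge: create new pods without exceeding replicas + max_surge in total
        can_create = min(replicas + max_surge - (old + new), replicas - new)
        if can_create > 0:
            new += can_create
            steps.append((old, new))
        # Remove old pods while keeping at least replicas - max_unavailable
        can_remove = min(old, old + new - (replicas - max_unavailable))
        if can_remove > 0:
            old -= can_remove
            steps.append((old, new))
    return steps


if __name__ == "__main__":
    for old, new in rolling_update_steps(replicas=3, max_surge=1, max_unavailable=0):
        print(f"old={old} new={new} total={old + new}")
```

With these settings the rollout alternates between adding one new pod and removing one old pod, trading a little extra capacity for never dipping below three serving pods.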

Infrastructure as Code: The Deployment Pipeline

The biggest production lesson I learned: deployments should be boring.

GitOps: The Game Changer

Instead of manual deployments, I moved to GitOps:

1. Code Change: Developer pushes to main branch
2. CI Pipeline: Builds and tests Docker image
3. Image Registry: Pushes tagged image to registry
4. GitOps Agent: Detects new image, updates deployment
5. Cluster: Automatically rolls out new version

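```yaml
# .github/workflows/deploy.yml
name: Deploy to Production

on:
  push:
    branches: [main]

jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      # Registry login; the secret names are hypothetical
      - name: Log in to registry
        uses: docker/login-action@v3
        with:
          registry: myregistry.com
          username: ${{ secrets.REGISTRY_USERNAME }}
          password: ${{ secrets.REGISTRY_PASSWORD }}

      - name: Build and Push Docker Image
        run: |
          docker build -t myregistry.com/api:${{ github.sha }} .
          docker push myregistry.com/api:${{ github.sha }}

      # Assumes kubectl is already configured with access to the cluster
      - name: Update Kubernetes Deployment
        run: |
          kubectl set image deployment/my-api api=myregistry.com/api:${{ github.sha }}
          kubectl rollout status deployment/my-api
```

Strictly speaking, this workflow is push-based: the CI job runs kubectl itself. In a pure GitOps setup, the last step would instead commit the new image tag to a manifests repository, and the GitOps agent (Argo CD or Flux, for example) would roll it out. Either way, this approach eliminated the "works on my machine" problem for deployments too.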

Monitoring and Observability: The Production Essentials

Containers are ephemeral—they come and go. Traditional monitoring approaches break down when your infrastructure is constantly changing.

The Three Pillars of Observability

- Metrics: What's happening in aggregate?
- Logs: What happened when something went wrong?
- Traces: How did a request flow through the system?
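For the logs and traces pillars, a cheap first step is structured logging: one JSON object per line on stdout, with an ID that ties each line to a request. The sketch below uses only Python's standard `logging` module. The `trace_id` field, and where it comes from (in a traced system, usually the incoming request's trace context), are assumptions for illustration.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            # Supplied via `extra=`; None for lines not tied to a request
            "trace_id": getattr(record, "trace_id", None),
        }
        return json.dumps(entry)


def make_logger(name: str = "my-api") -> logging.Logger:
    handler = logging.StreamHandler(sys.stdout)  # container runtimes collect stdout
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


if __name__ == "__main__":
    log = make_logger()
    # Hypothetical trace ID; a real one would come from the request context
    log.info("order created", extra={"trace_id": "4bf92f3577b34da6a3ce929d0e0e4736"})
```

Because every line is machine-readable and carries the same ID across services, you can filter logs for one request even after the container that handled it is gone.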

Container-Native Monitoring

```yaml
# prometheus-config.yml
scrape_configs:
  - job_name: 'my-api'
    kubernetes_sd_configs:
      - role: endpoints
    relabel_configs:
      # Keep only endpoints whose Service is annotated prometheus.io/scrape: "true"
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
```

The key insight: Monitor the behavior, not the containers. Containers are implementation details.
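On the application side, the Deployment's probes and this scrape config expect a few HTTP endpoints. Here is a minimal standard-library sketch of a service exposing `/health` (liveness), `/ready` (readiness), and `/metrics` in the Prometheus text format, which Prometheus scrapes at the `/metrics` path by default. Port 8080 matches the Deployment above. The `READY` flag and the single request counter are hypothetical stand-ins; a real service would more likely use the official `prometheus_client` library.

```python
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Tuple

READY = threading.Event()  # hypothetical: set once dependencies are reachable
_lock = threading.Lock()
_requests_total = 0


def route(path: str) -> Tuple[int, str]:
    """Map a request path to (status, body), separate from the HTTP plumbing."""
    global _requests_total
    with _lock:
        _requests_total += 1  # counts every request, probes included
        total = _requests_total
    if path == "/health":
        return 200, "ok\n"  # liveness: the process can answer at all
    if path == "/ready":
        # Readiness: refuse traffic until startup work has finished
        return (200, "ready\n") if READY.is_set() else (503, "starting\n")
    if path == "/metrics":
        return 200, (
            "# HELP http_requests_total Total HTTP requests handled.\n"
            "# TYPE http_requests_total counter\n"
            f"http_requests_total {total}\n"
        )
    return 404, "not found\n"


class Handler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        status, body = route(self.path)
        data = body.encode()
        self.send_response(status)
        self.send_header("Content-Type", "text/plain; version=0.0.4")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port: int = 8080) -> None:
    """Blocking entrypoint; call this from the container's main script."""
    server = ThreadingHTTPServer(("0.0.0.0", port), Handler)
    READY.set()  # a real service would check its database, caches, etc. first
    server.serve_forever()
```

Keeping `/health` and `/ready` separate matters: a pod that is still warming up should be taken out of rotation, not restarted.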

Scaling Strategies: Beyond Adding More Containers

Scaling isn't just about replicas—it's about understanding your bottlenecks.

Horizontal vs Vertical Scaling

- Horizontal: More container instances
- Vertical: Bigger container instances

The decision depends on your application's characteristics:

```yaml
# CPU-bound service (horizontal scaling: many small replicas)
# These fragments go under a container entry in the Pod template
resources:
  requests:
    cpu: "100m"
    memory: "128Mi"
  limits:
    cpu: "500m"
    memory: "256Mi"
```

```yaml
# Memory-bound service (vertical scaling: fewer, larger replicas)
resources:
  requests:
    cpu: "100m"
    memory: "1Gi"
  limits:
    cpu: "200m"
    memory: "2Gi"
```

Auto-scaling Based on Metrics

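```yaml
# hpa.yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-api-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-api
  minReplicas: 3
  maxReplicas: 50
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          # Percentage of the pods' CPU requests, so requests must be set
          averageUtilization: 70
```

Utilization here is measured against each pod's CPU request, so the HPA only works when requests are set, as in the resource blocks above. This also taught me that good scaling has to be predictive, not just reactive: an HPA responds to load after it arrives, so the minReplicas floor and the 70% target need enough headroom to absorb a spike while new pods start.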
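The HPA's core calculation is documented by Kubernetes as desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), skipped when the ratio is within a tolerance (10% by default). A simplified sketch of that rule, clamped to the minReplicas and maxReplicas above, makes the 70% target concrete. It deliberately leaves out the real controller's stabilization windows and its handling of pods that are not yet ready; `desired_replicas` is a hypothetical helper name.

```python
import math


def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float = 70.0,
                     min_replicas: int = 3, max_replicas: int = 50,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA scaling rule (no stabilization window)."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: avoid flapping
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(3, 140.0))   # CPU at twice the target
    print(desired_replicas(10, 35.0))   # CPU at half the target
    print(desired_replicas(3, 74.0))    # within the 10% tolerance
    print(desired_replicas(40, 140.0))  # capped by maxReplicas
```

These four calls print 6, 5, 3, and 50: doubling load doubles replicas, halving it halves them, small wobbles are ignored, and maxReplicas is a hard ceiling.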

Security in Production: The Non-Negotiables

Production security isn't just about container security—it's about the entire deployment pipeline.

Image Supply Chain Security

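```bash
# Sign the image tag with Docker Content Trust
docker trust sign myregistry.com/api:v1.2.4

# Verify signatures on pull: with content trust enabled, the Docker CLI
# refuses unsigned images. Kubernetes does not read this variable, so
# cluster-side enforcement needs a separate admission policy.
export DOCKER_CONTENT_TRUST=1
docker pull myregistry.com/api:v1.2.4
```

Runtime Security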

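```yaml
# Security context in Kubernetes, set on the container: readOnlyRootFilesystem,
# allowPrivilegeEscalation and capabilities are container-level fields
securityContext:
  runAsNonRoot: true
  runAsUser: 65534   # the conventional "nobody" user
  readOnlyRootFilesystem: true
  allowPrivilegeEscalation: false
  capabilities:
    drop:
      - ALL
```

The principle: Default deny, explicit allow.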
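"Default deny" can also be checked mechanically. As a rough sketch, not a replacement for a real admission mechanism such as Kubernetes Pod Security Admission, the hypothetical `security_violations` function below checks a container's securityContext, parsed into a dict, against the settings above. Missing fields count as violations, which is the default-deny idea in miniature.

```python
from typing import Any, Dict, List

# Settings every container must declare explicitly (from the manifest above)
REQUIRED: Dict[str, Any] = {
    "runAsNonRoot": True,
    "readOnlyRootFilesystem": True,
    "allowPrivilegeEscalation": False,
}


def security_violations(ctx: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the context passes."""
    problems = []
    for field, expected in REQUIRED.items():
        # A missing field is a violation: nothing is allowed implicitly
        if ctx.get(field) != expected:
            problems.append(f"{field} must be {str(expected).lower()}")
    if ctx.get("runAsUser") == 0:
        problems.append("runAsUser must not be 0 (root)")
    if "ALL" not in ctx.get("capabilities", {}).get("drop", []):
        problems.append("capabilities.drop must include ALL")
    return problems


if __name__ == "__main__":
    hardened = {
        "runAsNonRoot": True,
        "runAsUser": 65534,
        "readOnlyRootFilesystem": True,
        "allowPrivilegeEscalation": False,
        "capabilities": {"drop": ["ALL"]},
    }
    print(security_violations(hardened))  # passes: no problems
    print(security_violations({}))        # every required setting is missing
```

Running a check like this in CI catches a missing setting before the manifest ever reaches the cluster.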

The Production Mindset

After years of production deployments, I've learned that success isn't about perfect technology—it's about graceful failure.

Design for Failure

- Circuit Breakers: Fail fast when dependencies are down
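A circuit breaker is simple to sketch. The version below is a minimal, hypothetical implementation: after `failure_threshold` consecutive failures it opens and rejects calls immediately; once `reset_timeout` seconds pass it goes half-open and lets calls through again, closing on the first success and re-opening on a failure. Production libraries usually also limit how many trial calls run while half-open.

```python
import time
from typing import Any, Callable, Optional


class CircuitOpenError(Exception):
    """Raised instead of calling a dependency while the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 5, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means closed

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"  # let trial calls through
        return "open"

    def call(self, func: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        if self.state == "open":
            raise CircuitOpenError("dependency marked down; failing fast")
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._record_failure()
            raise
        # Any success closes the circuit and resets the failure count
        self.failures = 0
        self.opened_at = None
        return result

    def _record_failure(self) -> None:
        self.failures += 1
        # A failed trial while half-open re-opens the circuit immediately
        if self.state == "half-open" or self.failures >= self.failure_threshold:
            self.opened_at = self.clock()


if __name__ == "__main__":
    breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10.0)

    def flaky_dependency() -> None:
        raise ConnectionError("payments service unreachable")  # hypothetical

    for attempt in range(3):
        try:
            breaker.call(flaky_dependency)
        except (CircuitOpenError, ConnectionError) as exc:
            print(f"attempt {attempt}: {exc}")
```

The third attempt never reaches the dependency: the breaker answers immediately, which frees the caller's threads and gives the struggling service room to recover.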

The Observability Loop

1. Monitor: Continuously watch system health
2. Alert: Notify when things go wrong
3. Investigate: Understand the root cause
4. Fix: Address the underlying issue
5. Improve: Update monitoring based on what you learned

What Production Taught Me

Production deployments taught me that the technology is just the beginning. The real skill is operational excellence:

- Predictable deployments that happen without drama

Docker containers are just the foundation. Production is about building systems that you trust.

The Journey Continues

You've now gone from Docker basics to production deployment strategies. But this is where the journey really begins:

- Service Mesh: For complex microservice communication

But remember: master the fundamentals first. Every advanced pattern builds on the solid foundation you've built through this series.

---

You now have the complete toolkit for Docker in production. The real learning happens when you deploy your first production system and watch it succeed.