Docker for Backend Developers: Part 4 - Multi-Service Development with Docker Compose


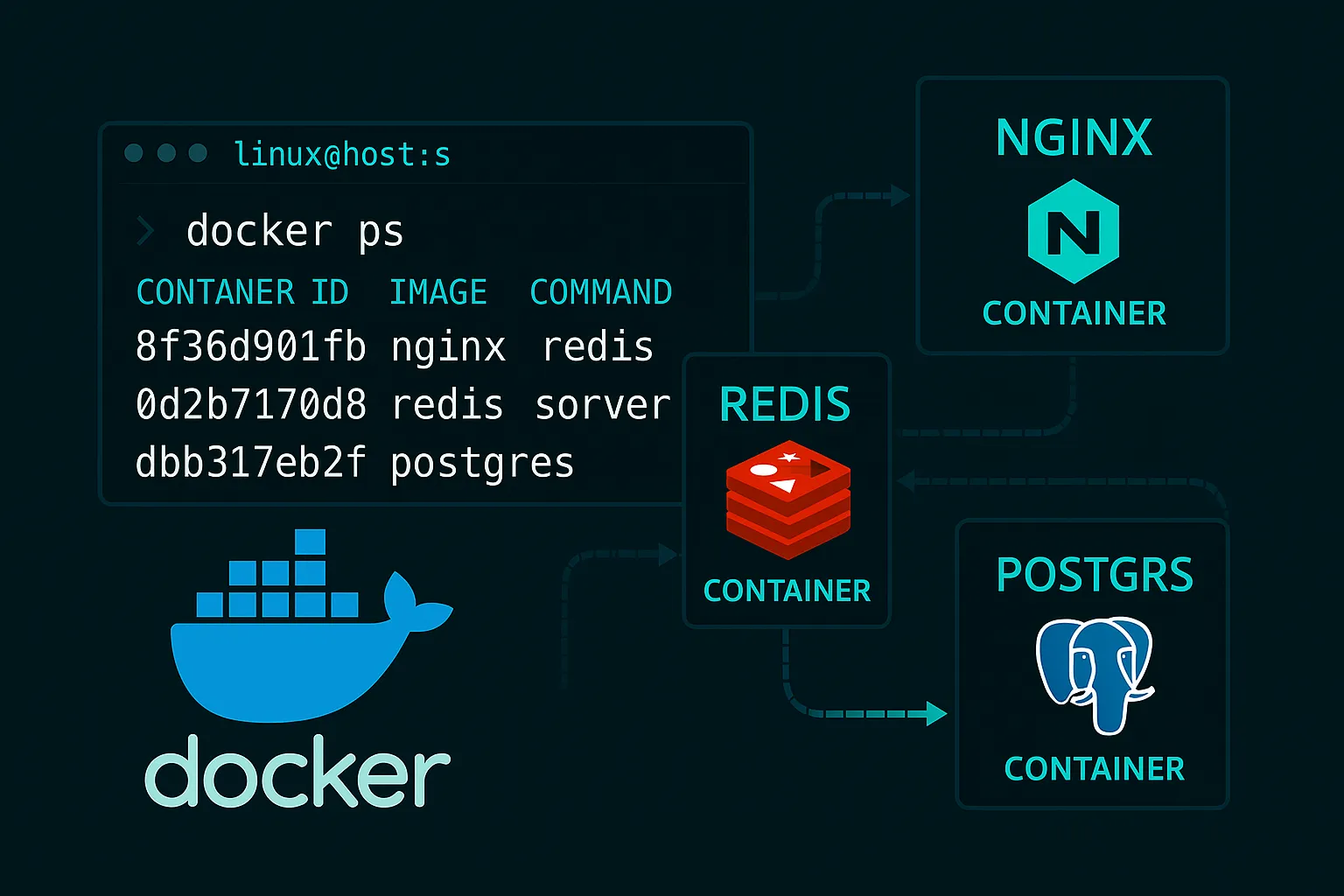

You've mastered individual containers, but real backend applications are ecosystems—APIs talking to databases, workers processing queues, caches speeding up responses. Managing this complexity with individual containers is like conducting an orchestra by shouting at each musician individually.

Docker Compose changed everything for me. It's the difference between chaos and harmony in multi-service development.

The Orchestration Problem I Faced

Picture building a user management system: a PostgreSQL database, a Redis cache, an API, a background worker, and an Nginx proxy in front.

My pre-Compose workflow was insane:

# Terminal 1: Start database
docker run -d -p 5432:5432 -e POSTGRES_PASSWORD=secret postgres

# Terminal 2: Start Redis
docker run -d -p 6379:6379 redis

# Terminal 3: Start API (after manually finding database IP)
docker run -d -p 8080:8080 -e DB_HOST=172.17.0.2 my-api

# Terminal 4: Start worker (same manual IP hunting)
docker run -d -e DB_HOST=172.17.0.2 -e REDIS_HOST=172.17.0.3 my-worker

# Terminal 5: Start proxy
docker run -d -p 80:80 -v $(pwd)/nginx.conf:/etc/nginx/nginx.conf nginx

Every morning, I'd spend 20 minutes just getting my development environment running. The IP addresses would change, services would start in the wrong order, and I'd inevitably forget some crucial environment variable.

The Docker Compose Revelation

Docker Compose solved this with a simple philosophy: describe your application as a collection of services, not individual containers.

The Mental Model Shift

Before: "I need to run 5 different containers and wire them together"

After: "I have one application with 5 services"

This shift is profound because you start thinking about service relationships rather than container management.

Understanding Docker Compose Architecture

The Service-Centric Model

In Compose, everything revolves around services:

services:
  api:        # Service name becomes hostname
    # Service definition
  database:   # Other services can reach this as "database"
    # Service definition
  cache:      # Available as "cache" to other services
    # Service definition

The magic: service names become DNS hostnames automatically. Your API can connect to database:5432 without knowing the actual IP address.
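Under the hood this is ordinary DNS. On the networks Compose creates, Docker runs an embedded DNS server that answers for each service name, so a client library's normal lookup just works. Here is a minimal Python sketch of that lookup. `resolve_service` is a hypothetical helper. Inside a container on the Compose network, names like "database" and "cache" resolve to the current container IPs. Outside that network they raise `socket.gaierror`.

```python
import socket


def resolve_service(name: str, port: int) -> list[str]:
    """Return the IPv4 addresses a hostname resolves to, as a client would see them.

    Inside a Compose network, Docker's embedded DNS answers for service
    names such as "database" or "cache".
    """
    infos = socket.getaddrinfo(name, port, family=socket.AF_INET, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr is (ip, port).
    # dict.fromkeys drops duplicates while keeping the resolver's order.
    return list(dict.fromkeys(info[4][0] for info in infos))
```

Because the name is looked up whenever a client connects, a client that reconnects after the database container is recreated finds the new IP without any configuration change.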

Network Isolation by Default

Compose creates an isolated network for your application. Services on it can reach each other by name. Containers on other Docker networks can't reach them, and they aren't reachable through the host's ports unless you explicitly publish them.
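Publishing is what a `ports` entry such as "8080:8080" does. It uses the same host-first, container-second order as `docker run -p`. The Python sketch below shows how the short syntax breaks down. `parse_port` and `PortMapping` are hypothetical names for illustration, not part of any Docker API.

```python
from typing import NamedTuple, Optional


class PortMapping(NamedTuple):
    host_ip: Optional[str]    # None: listen on all host interfaces
    host_port: Optional[int]  # None: Docker picks a free host port
    container_port: int
    protocol: str


def parse_port(spec: str) -> PortMapping:
    """Parse Compose short port syntax [[HOST_IP:]HOST_PORT:]CONTAINER_PORT[/PROTOCOL].

    Simplified sketch: port ranges and IPv6 host addresses are not handled.
    """
    ports, _, protocol = spec.partition("/")
    protocol = protocol or "tcp"
    parts = ports.split(":")
    if len(parts) == 1:  # "6379": container port only
        return PortMapping(None, None, int(parts[0]), protocol)
    if len(parts) == 2:  # "8080:8080": host port, then container port
        return PortMapping(None, int(parts[0]), int(parts[1]), protocol)
    if len(parts) == 3:  # "127.0.0.1:5432:5432" or "127.0.0.1::5432"
        host_port = int(parts[1]) if parts[1] else None
        return PortMapping(parts[0], host_port, int(parts[2]), protocol)
    raise ValueError(f"unsupported port spec: {spec!r}")


print(parse_port("8080:8080"))
# PortMapping(host_ip=None, host_port=8080, container_port=8080, protocol='tcp')
```

Publishing a development-only port as "127.0.0.1:5432:5432" instead of "5432:5432" keeps it reachable only from your own machine.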

┌─────────────────────────────────────┐
│         app_default network         │
│                                     │
│  ┌─────┐  ┌──────────┐  ┌────────┐  │
│  │ api │  │ database │  │ worker │  │
│  └─────┘  └──────────┘  └────────┘  │
│     │                       │       │
└─────┼───────────────────────┼───────┘
      │                       │
  Port 8080            (internal only)
  exposed to host

Building a Real Multi-Service Application

Let me walk you through building a complete backend system that demonstrates all the key concepts.

The Application: URL Shortener Service

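Services we'll build: a Go API, a Python background worker, a PostgreSQL database, a Redis cache, and an Nginx reverse proxy.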

Project Structure

url-shortener/
├── docker-compose.yml
├── api/
│   ├── Dockerfile
│   ├── main.go
│   └── go.mod
├── worker/
│   ├── Dockerfile
│   ├── worker.py
│   └── requirements.txt
├── nginx/
│   └── nginx.conf
└── init-db/
    └── schema.sql

The Docker Compose Configuration

version: '3.8'  # Optional: Compose V2 treats this field as obsolete and ignores it

services:
  api:
    build: ./api
    ports:
      - "8080:8080"
    environment:
      DB_HOST: database
      DB_USER: urlshortener
      DB_PASSWORD: secret123
      DB_NAME: urls
      REDIS_HOST: cache
    depends_on:
      database:
        condition: service_healthy
      cache:
        condition: service_started
    restart: unless-stopped

  worker:
    build: ./worker
    environment:
      DB_HOST: database
      DB_USER: urlshortener
      DB_PASSWORD: secret123
      DB_NAME: urls
      REDIS_HOST: cache
    depends_on:
      database:
        condition: service_healthy
      cache:
        condition: service_started
    restart: unless-stopped

  database:
    image: postgres:15
    environment:
      POSTGRES_DB: urls
      POSTGRES_USER: urlshortener
      POSTGRES_PASSWORD: secret123
    volumes:
      - postgres_data:/var/lib/postgresql/data
      - ./init-db:/docker-entrypoint-initdb.d  # Runs only when the data volume is empty
    ports:
      - "5432:5432"  # For development access
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U urlshortener -d urls"]
      interval: 10s
      timeout: 5s
      retries: 5

  cache:
    image: redis:7-alpine
    command: redis-server --appendonly yes
    volumes:
      - redis_data:/data
    ports:
      - "6379:6379"  # For development access

  proxy:
    image: nginx:alpine
    ports:
      - "80:80"
    volumes:
      - ./nginx/nginx.conf:/etc/nginx/nginx.conf
    depends_on:
      - api

volumes:
  postgres_data:
  redis_data:

Understanding the Compose Features

depends_on with conditions:

depends_on:
  database:
    condition: service_healthy

This ensures the API doesn't start until the database is not just running but actually healthy and accepting connections.
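One way to picture what `depends_on` gives you is a dependency graph walked in waves. Services with no unmet dependencies start together, and their dependents wait. The sketch below models this with Python's standard `graphlib.TopologicalSorter`, using the service graph from the Compose file above. It is a simplified model, not Compose's actual scheduler: it treats "started", "healthy", and "completed" conditions alike.

```python
from graphlib import TopologicalSorter

# Service -> services it depends_on, taken from the compose file above.
DEPENDS_ON = {
    "api": {"database", "cache"},
    "worker": {"database", "cache"},
    "proxy": {"api"},
    "database": set(),
    "cache": set(),
}


def startup_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group services into waves; every service in a wave can start in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # Raises graphlib.CycleError on circular depends_on
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # All dependencies already satisfied
        waves.append(ready)
        sorter.done(*ready)  # In Compose terms: these are now started/healthy
    return waves


print(startup_waves(DEPENDS_ON))
# [['cache', 'database'], ['api', 'worker'], ['proxy']]
```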

Service networking:

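environment:
  DB_HOST: database  # Uses service name as hostname

Volume persistence:

volumes:
  - postgres_data:/var/lib/postgresql/data

Named volumes persist data across container restarts and even across docker-compose down. Only docker-compose down -v, or removing the volume explicitly, deletes that data.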

Health checks:

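healthcheck:
  test: ["CMD-SHELL", "pg_isready -U urlshortener -d urls"]

This tells Docker how to decide whether a service is truly ready. pg_isready exits successfully only when the server is accepting connections, and depends_on with condition: service_healthy waits for that result.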
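Conceptually, a `healthcheck` is a loop. Docker runs the test every `interval`, gives each attempt up to `timeout`, and marks the service unhealthy after `retries` consecutive failures. The Python sketch below reproduces that loop with a plain TCP connect as the probe. This is a weaker check than `pg_isready`, because an open port does not prove Postgres accepts logins. `tcp_probe` and `wait_until_healthy` are hypothetical helpers, useful for example in a script that has to wait on a dependency Compose isn't managing.

```python
import socket
import time
from typing import Callable


def tcp_probe(host: str, port: int, timeout: float) -> bool:
    """Return True if something is accepting TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # Refused, unreachable, timed out, or name not found
        return False


def wait_until_healthy(check: Callable[[], bool], interval: float, retries: int) -> bool:
    """Mimic healthcheck semantics: healthy on the first success,
    unhealthy after `retries` consecutive failures."""
    for attempt in range(retries):
        if check():
            return True
        if attempt < retries - 1:
            time.sleep(interval)  # Wait `interval` before the next probe
    return False


# Inside the Compose network, with values mirroring the compose file:
# wait_until_healthy(lambda: tcp_probe("database", 5432, timeout=5), interval=10, retries=5)
```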

Service Communication Patterns

Database Connection Pattern

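// api/main.go - Database connection
package main

import (
	"database/sql"
	"fmt"
	"log"
	"os"

	_ "github.com/lib/pq" // Assumed Postgres driver; it registers the "postgres" name
)

func connectDB() *sql.DB {
	dbHost := os.Getenv("DB_HOST")         // "database"
	dbUser := os.Getenv("DB_USER")         // "urlshortener"
	dbPassword := os.Getenv("DB_PASSWORD") // "secret123"
	dbName := os.Getenv("DB_NAME")         // "urls"

	dsn := fmt.Sprintf("host=%s user=%s password=%s dbname=%s sslmode=disable",
		dbHost, dbUser, dbPassword, dbName)

	// sql.Open only validates its arguments; it does not connect yet.
	db, err := sql.Open("postgres", dsn)
	if err != nil {
		log.Fatal("Invalid database configuration:", err)
	}

	// Ping opens a real connection, so a wrong host or password fails here.
	if err := db.Ping(); err != nil {
		log.Fatal("Failed to connect to database:", err)
	}

	return db
}

The beauty: No hardcoded IPs, no service discovery complexity. Just use the service name.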
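One caveat with building the DSN through `fmt.Sprintf`: libpq's keyword/value format splits on whitespace, so a password containing a space or a quote breaks the string. libpq's rule is to wrap such values in single quotes and backslash-escape any `'` or `\` inside. Below is a minimal Python sketch of that rule. `quote_libpq_value` and `libpq_dsn` are hypothetical helpers. In Go you would apply the same escaping; psycopg2 handles it for you when given keyword arguments, as in the worker below.

```python
def quote_libpq_value(value: str) -> str:
    """Quote a value for a libpq keyword/value connection string.

    Empty values and values containing whitespace, single quotes, or
    backslashes are wrapped in single quotes; ' and \\ are backslash-escaped.
    """
    if value and not any(ch.isspace() or ch in "'\\" for ch in value):
        return value  # Safe to emit bare
    escaped = value.replace("\\", "\\\\").replace("'", "\\'")
    return f"'{escaped}'"


def libpq_dsn(**params: str) -> str:
    """Build 'key=value key=value ...' with every value safely quoted."""
    return " ".join(f"{key}={quote_libpq_value(val)}" for key, val in params.items())


print(libpq_dsn(host="database", user="urlshortener", password="it's a secret", dbname="urls"))
# host=database user=urlshortener password='it\'s a secret' dbname=urls
```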

Inter-Service Communication

# worker/worker.py - Connecting to multiple services
import os

import psycopg2
import redis


def connect_services():
    # Database connection: DB_HOST is the Compose service name
    db = psycopg2.connect(
        host=os.getenv('DB_HOST'),  # "database"
        user=os.getenv('DB_USER'),
        password=os.getenv('DB_PASSWORD'),
        dbname=os.getenv('DB_NAME'),
    )

    # Redis connection: REDIS_HOST is the Compose service name
    cache = redis.Redis(
        host=os.getenv('REDIS_HOST'),  # "cache"
        port=6379,
        decode_responses=True,
    )

    return db, cache

Load Balancing with Nginx

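# nginx/nginx.conf
# This file replaces the image's main config, so it needs events and http blocks.
events {}

http {
    include /etc/nginx/mime.types;

    upstream api {
        server api:8080;  # "api" is resolved through Docker's DNS
    }

    server {
        listen 80;

        location /api/ {
            # The trailing slash strips the /api/ prefix before forwarding
            proxy_pass http://api/;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
        }

        location / {
            root /usr/share/nginx/html;
            index index.html;
        }
    }
}

Key insight: server api:8080 references the API service by name. Nginx resolves that name through Docker's embedded DNS when it loads its configuration, so if the api container is recreated with a new IP, reload or restart the proxy.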
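When a service is scaled to several containers, Docker's DNS returns one address per container for its name. Nginx treats a name that resolves to several addresses as several upstream servers, and its default policy is round-robin. Scaling the api service here would first require dropping its fixed "8080:8080" host port, since only one container can bind it. Below is a toy Python model of the round-robin policy. The IP addresses are made up, and real Nginx also handles weights, failures, and retries.

```python
from itertools import cycle
from typing import Iterator


def round_robin(addresses: list[str]) -> Iterator[str]:
    """Yield upstream addresses in turn, like nginx's default balancing."""
    if not addresses:
        raise ValueError("no upstream addresses")
    return cycle(addresses)


# Hypothetical IPs that "api" might resolve to with three replicas.
picker = round_robin(["172.18.0.4", "172.18.0.5", "172.18.0.6"])
print([next(picker) for _ in range(4)])
# ['172.18.0.4', '172.18.0.5', '172.18.0.6', '172.18.0.4']
```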

Development Workflow with Compose

The Magic Commands

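# Start entire application
docker-compose up

# Start in background
docker-compose up -d

# View logs from all services
docker-compose logs

# View logs from specific service
docker-compose logs api

# Scale a service (worker publishes no host ports, so replicas don't collide)
docker-compose up -d --scale worker=3

# Stop and remove containers and networks (named volumes are kept)
docker-compose down

# Stop and remove volumes too (careful: deletes database and Redis data!)
docker-compose down -v

# With Compose V2, the same commands are spelled "docker compose ..."

Development-Specific Overrides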

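For development, I use compose overrides:

# docker-compose.override.yml (automatically loaded)
version: '3.8'

services:
  api:
    volumes:
      - ./api:/app  # Mount source code for live editing
    environment:
      - DEBUG=true
      - LOG_LEVEL=debug

  worker:
    volumes:
      - ./worker:/app
    environment:
      - DEBUG=true

The workflow: docker-compose up automatically merges the base compose file with the override. Source edits show up inside the running containers immediately. The process still has to pick them up, though, so pair the mounts with a file watcher or an auto-reloading dev server; a compiled Go binary, for example, must be rebuilt. Note that a bind mount hides anything the Dockerfile placed at /app.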
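Roughly, the merge works like this. Mappings are merged key by key, and scalar values from the later file win. Environment variables are merged by name, and list options such as `ports` are combined. The sketch below is a simplified Python model of those rules, not Compose's implementation. `merge_compose` and `env_to_dict` are hypothetical names, and edge cases such as volumes being merged by container path are ignored.

```python
from typing import Any


def env_to_dict(env: Any) -> dict:
    """Normalize Compose environment syntax to a dict.

    Accepts a mapping or a list of "KEY=VALUE" strings (bare "KEY" entries,
    which pass values through from the host, are not handled in this sketch).
    """
    if isinstance(env, dict):
        return dict(env)
    return dict(item.split("=", 1) for item in env)


def merge_compose(base: Any, override: Any, key: str = "") -> Any:
    """Simplified model of layering an override file on top of a base file."""
    if key == "environment":
        # Variables are merged by name; the override wins on conflicts.
        return {**env_to_dict(base), **env_to_dict(override)}
    if isinstance(base, dict) and isinstance(override, dict):
        merged = dict(base)
        for k, v in override.items():
            merged[k] = merge_compose(base[k], v, k) if k in base else v
        return merged
    if isinstance(base, list) and isinstance(override, list):
        # Lists such as ports are combined (real Compose merges volumes by target path).
        return base + [item for item in override if item not in base]
    return override  # Scalars: the later file wins


base = {"services": {"api": {"build": "./api",
                             "environment": {"DB_HOST": "database", "REDIS_HOST": "cache"}}}}
override = {"services": {"api": {"volumes": ["./api:/app"],
                                 "environment": ["DEBUG=true", "LOG_LEVEL=debug"]}}}

print(merge_compose(base, override)["services"]["api"])
# {'build': './api', 'environment': {'DB_HOST': 'database', 'REDIS_HOST': 'cache', 'DEBUG': 'true', 'LOG_LEVEL': 'debug'}, 'volumes': ['./api:/app']}
```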

Advanced Compose Patterns

Environment-Specific Configurations

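# Development (base file + docker-compose.override.yml)
docker-compose up

# Staging (with explicit -f files, the override file is no longer loaded automatically)
docker-compose -f docker-compose.yml -f docker-compose.staging.yml up

# Production
docker-compose -f docker-compose.yml -f docker-compose.prod.yml up

Secrets Management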

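# docker-compose.prod.yml
services:
  api:
    environment:
      DB_PASSWORD_FILE: /run/secrets/db_password
    secrets:
      - db_password

secrets:
  db_password:
    external: true

The secret is mounted as a file at /run/secrets/db_password. The API therefore has to read the password from the path in DB_PASSWORD_FILE instead of from DB_PASSWORD; Compose does not do that translation for you.

Service Dependencies and Startup Order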

services:
  api:
    depends_on:
      database:
        condition: service_healthy
      cache:
        condition: service_started
      migration:
        condition: service_completed_successfully

  migration:
    build: ./migration
    depends_on:
      database:
        condition: service_healthy
    restart: "no"  # Run once then exit

Networking Deep Dive

Default Network Behavior

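Compose creates a default network, named after the project with a _default suffix. Every service is attached to it automatically and is reachable by its service name.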

Custom Networks

networks:
  frontend:
    driver: bridge
  backend:
    driver: bridge

services:
  api:
    networks:
      - frontend
      - backend

  database:
    networks:
      - backend  # Not accessible from frontend network

Use case: Isolate your database on a backend-only network for security.
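The rule behind that isolation is simple: two containers can connect directly only if they share at least one network. A small Python model of the configuration above makes this explicit. The `proxy` entry is a hypothetical frontend-only service, added to show a service that cannot reach the database.

```python
# Service -> networks it joins. api and database match the config above;
# proxy is a hypothetical frontend-only service.
NETWORKS = {
    "proxy": {"frontend"},
    "api": {"frontend", "backend"},
    "database": {"backend"},
}


def can_reach(a: str, b: str, networks: dict[str, set[str]]) -> bool:
    """Two services can connect directly only if they share a network."""
    return bool(networks[a] & networks[b])


for src, dst in [("api", "database"), ("proxy", "api"), ("proxy", "database")]:
    print(f"{src} -> {dst}: {can_reach(src, dst, NETWORKS)}")
# api -> database: True
# proxy -> api: True
# proxy -> database: False
```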

Data Persistence Strategies

Named Volumes vs Bind Mounts

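services:
  database:
    volumes:
      # Named volume (Docker managed)
      - postgres_data:/var/lib/postgresql/data
      # Bind mount (you manage)
      - ./backups:/backups
      # Read-only bind mount
      - ./config:/etc/config:ro

volumes:
  postgres_data:  # Declare named volume

When to use each: use named volumes when Docker should own the data, such as database files. Use bind mounts when you need to see or edit the files from the host, such as source code, backups, or configuration, and add :ro when the container should only read them.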

Debugging Multi-Service Applications

Essential Debugging Commands

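# See all running services
docker-compose ps

# Check service health (the status column shows "healthy" or "unhealthy")
docker-compose ps database

# Follow logs in real time
docker-compose logs -f api

# Execute commands in a running service
docker-compose exec api sh

# Check network connectivity (requires ping inside the api image)
docker-compose exec api ping database

# Inspect networks (the default network is named after the project directory,
# e.g. url-shortener_default; docker network ls shows the exact name)
docker network ls
docker network inspect url-shortener_default

Service Communication Testing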

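# Test database connectivity from the API container (requires psql in the api image;
# otherwise use the database container: docker-compose exec database psql -U urlshortener -d urls)
docker-compose exec api psql -h database -U urlshortener -d urls

# Test Redis from the worker (requires redis-cli in the worker image)
docker-compose exec worker redis-cli -h cache ping

# Show which host port is mapped to the API's port 8080
docker-compose port api 8080

Performance Considerations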

Resource Allocation

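services:
  api:
    deploy:
      resources:
        limits:
          cpus: '1.0'
          memory: 512M
        reservations:
          cpus: '0.5'
          memory: 256M

Compose V2 applies these limits on docker compose up. The older docker-compose V1 ignored the deploy section unless run with --compatibility.

Startup Optimization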

services:
  database:
    # Start first (other services depend on it)

  cache:
    # Start in parallel with database

  api:
    # Start after database is healthy
    depends_on:
      database:
        condition: service_healthy

The Multi-Service Mindset

Working with Compose taught me to think differently about application architecture:

Monolithic thinking: "My application needs a database"

Service thinking: "My system has an API service and a database service that communicate"

This mindset shift prepares you for production deployment, scaling, and container orchestration platforms.

What You've Mastered

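You now understand how Compose wires services together by name, how depends_on and health checks control startup order, how networks and volumes isolate and persist data, and how override files and debugging commands fit into a daily development workflow.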

More importantly, you've learned to think in terms of systems rather than individual containers.

Next Steps

You can now build and orchestrate complex applications locally, but production deployment is a different challenge entirely. Part 5 covers production deployment strategies, scaling, and the transition to container orchestration platforms.

---

Ready to deploy your multi-service applications to production? Part 5 covers production deployment strategies and scaling.