Docker for Backend Developers: Part 3 - Production-Ready Containers for Go and Python

Your development containers work great locally, but production is a completely different challenge. I learned this the hard way when my "perfectly working" containers became security nightmares, performance bottlenecks, and operational headaches in production.

This part is about bridging the gap between "it works" and "it works reliably at scale."

The Production Wake-Up Call

Let me tell you about my first production container disaster. My Go API container worked beautifully in development—fast, clean, responsive.

Then we deployed it to production:

Day 1: Container image was 1.2GB (my manager was not happy about download times)

Day 2: Security scan found 47 vulnerabilities in our base image

Day 3: Container was using 500MB RAM for a simple HTTP service

Day 4: App crashed and we couldn't debug it because there was no shell, no tools, nothing

That week taught me the fundamental truth: development containers optimize for convenience, production containers optimize for reliability.

The Theory of Production-Ready Containers

Before we build anything, let's understand what "production-ready" actually means:

The Production Requirements

Security: Minimal attack surface, no unnecessary packages, non-root execution

Performance: Small images, fast startup, efficient resource usage

Observability: Proper logging, health checks, debugging capabilities

Reliability: Graceful failure handling, signal management, clean shutdown

The Multi-Stage Build Philosophy

The breakthrough insight that changed how I build containers: build environments and runtime environments have different needs.

Build Environment: Needs compilers, dev tools, build dependencies

Runtime Environment: Needs only the binary and its runtime dependencies

Multi-stage builds let you use a large, feature-rich environment for building, then copy only what you need to a minimal runtime environment.

Building Production Go Containers

Let me show you the evolution from my naive first attempt to production-ready containers.

The Naive Approach (Don't Do This)

FROM golang:1.21
COPY . .
RUN go build -o main .
EXPOSE 8080
CMD ["./main"]

Problems with this approach:

The Production Approach

# Build stage - use full Go environment
FROM golang:1.21-alpine AS builder

# Install git (for fetching modules), CA certificates, and timezone data
RUN apk add --no-cache git ca-certificates tzdata

# Create app directory
WORKDIR /app

# Copy dependency files first (better caching)
COPY go.mod go.sum ./

# Download dependencies
RUN go mod download

# Copy source code
COPY . .

# Build with production optimizations
RUN CGO_ENABLED=0 GOOS=linux go build \
    -ldflags='-w -s -extldflags "-static"' \
    -a -installsuffix cgo \
    -o main .

# Runtime stage - minimal environment
FROM scratch

# Copy CA certificates from builder
COPY --from=builder /etc/ssl/certs/ca-certificates.crt /etc/ssl/certs/

# Copy timezone data
COPY --from=builder /usr/share/zoneinfo /usr/share/zoneinfo

# Copy our binary
COPY --from=builder /app/main /main

# Use non-root user
USER 65534:65534

# Health check (exec form, since scratch has no shell;
# assumes the binary implements a -health-check flag)
HEALTHCHECK --interval=30s --timeout=3s --start-period=5s --retries=3 \
    CMD ["/main", "-health-check"]

EXPOSE 8080

ENTRYPOINT ["/main"]

Understanding the Production Optimizations

FROM scratch: The ultimate minimal base—no OS, no shell, just your binary. Perfect for Go's static compilation.

Multi-stage copying: COPY --from=builder pulls only necessary files from the build stage.

Build flags explained:

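-ldflags='-w -s': Strip debug info and symbol table

-extldflags "-static": Force static linking

CGO_ENABLED=0: Disable C bindings for pure Go binary

Non-root execution: USER 65534:65534 runs the process as UID/GID 65534, the ID conventionally assigned to the nobody user. The numeric form is needed because a scratch image has no /etc/passwd to resolve user names.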

Size comparison:

Building Production Python Containers

Python containers have different challenges—you can't compile to a static binary, so the approach differs.

The Python Production Strategy

# Build stage
FROM python:3.11-slim AS builder

# Install build dependencies
RUN apt-get update && apt-get install -y --no-install-recommends \
    build-essential \
    && rm -rf /var/lib/apt/lists/*

# Create virtual environment
RUN python -m venv /opt/venv
ENV PATH="/opt/venv/bin:$PATH"

# Copy requirements and install
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Production stage
FROM python:3.11-slim

# Install runtime dependencies only
# (no packages are listed here; add the shared libraries your app needs before the &&)
RUN apt-get update && apt-get install -y --no-install-recommends \
    && rm -rf /var/lib/apt/lists/*

# Create non-root user
RUN groupadd -r appuser && useradd -r -g appuser appuser

# Copy virtual environment from builder
COPY --from=builder /opt/venv /opt/venv

# Set PATH to use virtual environment
ENV PATH="/opt/venv/bin:$PATH"

WORKDIR /app

# Copy application code
COPY . .

# Set ownership and switch to non-root user
RUN chown -R appuser:appuser /app
USER appuser

# Health check: urllib is in the standard library, and urlopen raises on
# connection errors and on 4xx/5xx responses, so failures exit non-zero
HEALTHCHECK --interval=30s --timeout=3s --start-period=5s --retries=3 \
    CMD python -c "import urllib.request; urllib.request.urlopen('http://localhost:5000/health', timeout=2)" || exit 1

EXPOSE 5000

CMD ["python", "app.py"]

Python-Specific Optimizations

Virtual environment isolation: Even in containers, virtual environments provide dependency isolation and easier copying between stages.

Slim base images: python:3.11-slim provides Python runtime without dev tools, reducing image size significantly.

Package cleanup: rm -rf /var/lib/apt/lists/* removes package lists to save space.
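The HEALTHCHECK in the Dockerfile above runs a Python one-liner. If the probe grows beyond that, a small standalone script can make the pass/fail rule explicit. The following is a minimal sketch, not the article's implementation: the function names and the idea of shipping it as a separate file are assumptions, while the /health path on port 5000 comes from the Dockerfile.

```python
import urllib.request


def is_healthy_status(status: int) -> bool:
    """Treat any 2xx response as healthy."""
    return 200 <= status < 300


def check_health(url: str, timeout: float = 2.0) -> int:
    """Return a process exit code: 0 if the endpoint is healthy, 1 otherwise."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 0 if is_healthy_status(response.status) else 1
    except Exception:
        # Connection refused, timeouts, and HTTP 4xx/5xx (raised as HTTPError)
        # all count as unhealthy; a probe should never crash with a traceback.
        return 1
```

To use it as a probe, the script would end with something like sys.exit(check_health("http://localhost:5000/health")), and the HEALTHCHECK instruction would call the script instead of the inline command. It relies only on the standard library, so the probe keeps working even if the application's own dependencies change.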

Security: The Non-Negotiables

Production security isn't optional. Here are the principles I've learned:

1. Never Run as Root

Why it matters: If an attacker compromises your application, they shouldn't get root access to the container.

# Create specific user
RUN groupadd -r appuser && useradd -r -g appuser appuser
USER appuser

2. Minimal Base Images

The attack surface principle: Every package is a potential vulnerability.

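# Prefer minimal bases
# (Dockerfile comments must start the line; a trailing "# ..." after FROM is a build error)

# Alpine: about 5MB
FROM alpine:3.18

# scratch: 0MB (Go only)
FROM scratch

# Distroless: Google's minimal images, for example the static variant
FROM gcr.io/distroless/static-debian12

3. Regular Security Scanning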

# Scan your images regularly
docker scout cves my-api:latest
docker scout recommendations my-api:latest

4. Use Specific Tags, Not Latest

# Bad - latest can change
FROM python:latest

# Good - specific version
FROM python:3.11.5-slim

# Better - use digest for immutability
FROM python:3.11.5-slim@sha256:abc123...

Performance Optimization: The Hidden Details

Build Context Optimization

The .dockerignore file is crucial for performance:

# .dockerignore
node_modules
.git
.gitignore
*.md
.env
coverage/
.pytest_cache/
__pycache__/
.mypy_cache/

Why this matters: Docker sends the entire build context to the daemon. A large context slows down builds significantly.
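To see how much a .dockerignore actually saves, you can estimate the context size before and after applying the patterns. The sketch below is a rough approximation under stated assumptions: the helper names are hypothetical, and the matching only covers simple patterns anchored at the context root (as in the list above). It does not implement Docker's full rules such as `**` wildcards, `!` exceptions, or comment lines.

```python
import fnmatch
import os
from typing import Iterable


def is_ignored(rel_path: str, patterns: Iterable[str]) -> bool:
    """Approximate check: a pattern excludes a path if it matches the path
    itself or one of its parent directories, component by component."""
    path_parts = rel_path.split("/")
    for pattern in patterns:
        pattern_parts = pattern.strip("/").split("/")
        if len(pattern_parts) > len(path_parts):
            continue
        # zip stops at the pattern's length, so ".git" also covers ".git/config"
        if all(fnmatch.fnmatchcase(part, pat)
               for part, pat in zip(path_parts, pattern_parts)):
            return True
    return False


def context_size(root: str, patterns: Iterable[str]) -> int:
    """Sum the bytes of all files under root that the patterns do not exclude."""
    patterns = list(patterns)
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            rel = os.path.relpath(full, root).replace(os.sep, "/")
            if not is_ignored(rel, patterns):
                total += os.path.getsize(full)
    return total
```

Comparing context_size(".", []) with context_size(".", [".git", "node_modules", "*.md"]) gives a quick sense of how many bytes the ignore file keeps out of each build. Treat the number as an estimate; the Docker build output remains the authoritative measure of what is transferred.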

Layer Caching Strategy

Order Dockerfile instructions from least to most frequently changing:

# Install system dependencies (changes rarely)
RUN apt-get update && apt-get install -y curl

# Install application dependencies (changes occasionally)
COPY requirements.txt .
RUN pip install -r requirements.txt

# Copy application code (changes frequently)
COPY . .

Health Check Design

Proper health checks are critical for production orchestration:

# Simple health check
HEALTHCHECK --interval=30s --timeout=3s --start-period=5s --retries=3 \
    CMD curl -f http://localhost:8080/health || exit 1

# Advanced health check with custom logic
COPY health-check.sh /usr/local/bin/
RUN chmod +x /usr/local/bin/health-check.sh
HEALTHCHECK --interval=30s --timeout=10s --start-period=30s --retries=3 \
    CMD ["/usr/local/bin/health-check.sh"]

Logging: The Production Lifeline

Structured Logging for Containers

Containers should log to stdout/stderr, not files:

// Go structured logging
package main

import (
	"os"

	"github.com/sirupsen/logrus"
)

func main() {
	// JSON output is easy for log collectors to parse
	logrus.SetFormatter(&logrus.JSONFormatter{})
	logrus.SetLevel(logrus.InfoLevel)

	logrus.WithFields(logrus.Fields{
		"service": "user-api",
		"version": os.Getenv("VERSION"),
	}).Info("Service starting")
}

# Python structured logging

import logging
import os

from pythonjsonlogger import jsonlogger

# StreamHandler writes to stderr by default, which Docker captures
logHandler = logging.StreamHandler()
formatter = jsonlogger.JsonFormatter()
logHandler.setFormatter(formatter)

logger = logging.getLogger()
logger.addHandler(logHandler)
logger.setLevel(logging.INFO)

logger.info("Service starting", extra={
    "service": "user-api",
    "version": os.environ.get("VERSION")
})

Log Configuration in Docker

# Configure logging driver
docker run --log-driver=json-file \
    --log-opt max-size=10m \
    --log-opt max-file=3 \
    my-api

Signal Handling: Graceful Shutdown

Production containers must handle termination signals properly:

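// Go graceful shutdown
package main

import (
	"context"
	"log"
	"net/http"
	"os"
	"os/signal"
	"syscall"
	"time"
)

func main() {
	server := &http.Server{Addr: ":8080"}

	go func() {
		// ErrServerClosed is the expected result of Shutdown, not a failure
		if err := server.ListenAndServe(); err != nil && err != http.ErrServerClosed {
			log.Fatalf("server error: %v", err)
		}
	}()

	// Wait for interrupt signal
	c := make(chan os.Signal, 1)
	signal.Notify(c, os.Interrupt, syscall.SIGTERM)
	<-c

	// Graceful shutdown: give in-flight requests up to 30 seconds
	ctx, cancel := context.WithTimeout(context.Background(), 30*time.Second)
	defer cancel()
	if err := server.Shutdown(ctx); err != nil {
		log.Printf("shutdown error: %v", err)
	}
}

# Python graceful shutdown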

import signal
import sys

def signal_handler(sig, frame):
    print('Gracefully shutting down...')
    # Cleanup code here
    sys.exit(0)

signal.signal(signal.SIGINT, signal_handler)
signal.signal(signal.SIGTERM, signal_handler)

Resource Management

Setting Resource Limits

# In Dockerfile (documentation only)
LABEL resource.memory="512Mi"
LABEL resource.cpu="500m"

# At runtime
docker run --memory=512m --cpus=0.5 my-api

Understanding Container Resource Behavior

Memory limits: Container gets killed (OOMKilled) if it exceeds the limit

CPU limits: Container gets throttled but not killed

Disk I/O: Can be limited but requires specific storage drivers
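Because exceeding the memory limit kills the container outright, it can help for the application to know its own limit and size caches or worker pools accordingly. Here is a minimal sketch, assuming a host that uses cgroup v2, where the limit is exposed at /sys/fs/cgroup/memory.max. The function names are hypothetical, and hosts on cgroup v1 expose the value at a different path that this sketch does not handle.

```python
from pathlib import Path
from typing import Optional

# cgroup v2 location (an assumption about the host; cgroup v1 differs)
CGROUP_V2_MEMORY_MAX = Path("/sys/fs/cgroup/memory.max")


def parse_memory_max(raw: str) -> Optional[int]:
    """Parse the file contents: 'max' means no limit, otherwise a byte count."""
    value = raw.strip()
    if value == "max":
        return None
    return int(value)


def container_memory_limit(path: Path = CGROUP_V2_MEMORY_MAX) -> Optional[int]:
    """Return the memory limit in bytes, or None if unlimited or unreadable."""
    try:
        return parse_memory_max(path.read_text())
    except (OSError, ValueError):
        return None
```

With docker run --memory=512m, the file would be expected to contain 536870912 (512 × 1024 × 1024 bytes). Returning None for both "no limit" and "could not read" keeps the caller simple; code that must tell those cases apart would need a richer return type.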

The Production Container Checklist

Before deploying any container to production, I run through this checklist:

Security:

Performance:

Observability:

Reliability:

What You've Mastered

You now understand:

More importantly, you've developed the production mindset: every choice in your Dockerfile has runtime implications.

Next Steps

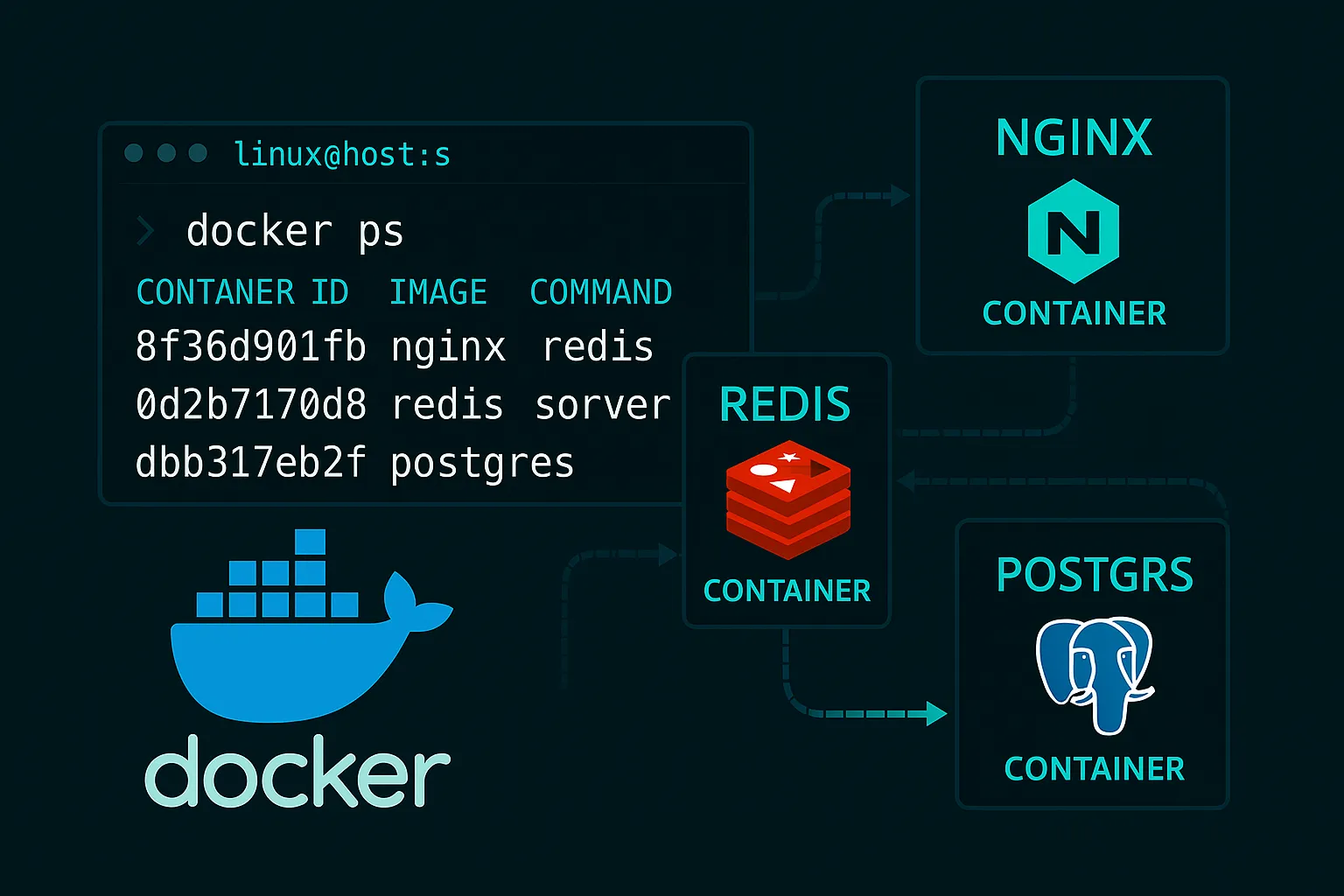

You can now build secure, efficient containers, but real applications need multiple services working together. Part 4 covers orchestrating these containers with Docker Compose for complex applications.

---

Ready to orchestrate multiple containers? Part 4 covers multi-service development with Docker Compose.